In the past, there was a clear demarcation between the role of the enterprise network and the internet. Network architects focused on the networks that were under their direct control, and relied on their service provider to provide access to the internet.

With the rise of cloud applications, we’re seeing a shift in the demarcation. Network architects now have key business applications hosted in the cloud, so they have to think about network performance in a different light: access to those applications follows traffic paths that are mostly outside of their network’s boundaries. Each application also has unique network performance requirements that must be considered. For example, webinar (one-to-many) streaming needs high bandwidth, real-time collaboration needs low latency, and backend systems hosted in virtual private clouds may have very high resilience and redundancy requirements. Further complicating matters, unlike private applications, cloud applications don’t have a predictable set of IP addresses and ports, and they are constantly evolving, which makes them a nebulous, moving target. Perhaps the term “cloud” is even more apropos than we realized!

Security, of course, is a critical enabler of applications, and that’s why the Secure Access Service Edge (SASE) has now emerged as an important conceptual model for describing how to protect users and applications that operate beyond the traditional network perimeter. SASE is a model that recognizes that the location of the user and the application can no longer be thought of as fixed. In other words, the traditional castle-and-moat approach to application and network security doesn’t protect people who aren’t in the castle.

Netskope’s platform engineering team is responsible for designing and operating NewEdge, the private security cloud on which the Netskope Cloud Security Platform runs. There’s a tremendous amount of interest from customers wanting to know how to support and secure their complex mix of applications: managed (IT-led), unmanaged (Shadow IT), on-prem, private apps in the cloud, third-party SaaS, and more. In many conversations about SASE, I find it very helpful to draw upon my decade of experience in the CDN space to walk through the elements that factor into the path that end-user traffic takes under various scenarios. This is because SASE is a new topic for everyone. The architecture of carrier networks, cloud providers, CDNs, and the internet is not necessarily widely understood, and the language for many concepts is not standardized across the industry.

Take the term “data center,” for example. In my world, a data center meant a physical building with concrete walls, raised floors, and carefully monitored environmental controls. I’ve always presumed that if you told me that you have two data centers, you own two such buildings (congratulations!), like the famous NAP of the Americas in Miami, Florida. Today, only colocation facilities, telcos, a handful of managed service providers, and a very small number of enterprises own and build their own “data centers.” In fact, many colocation providers don’t own the building; they just lease space within a larger data center. In contrast, a point of presence (POP) is a location where you have placed servers, but don’t own or operate the data center. Yet in the security space, you will often see “POPs” referred to as data centers, which can sometimes cause confusion.

Semantics aside, I now recognize that the definition of data center is changing, and that the easiest way to facilitate a discussion about points of presence is to use the language that is the most widely understood in the security space. In this article and in the future, I’ll use “data center” to refer to any location where servers or network equipment is present.

As an architect, I love discussions with our customers about end-to-end latency and how to ensure a great user experience. This is easy to do with a big empty whiteboard and a fresh box of dry-erase pens. It’s a bit harder to have the same discussion in writing, when we first need to check that we are using terms in the same way. This is why I felt it was prudent to explain the “path of the packet” in this blog.

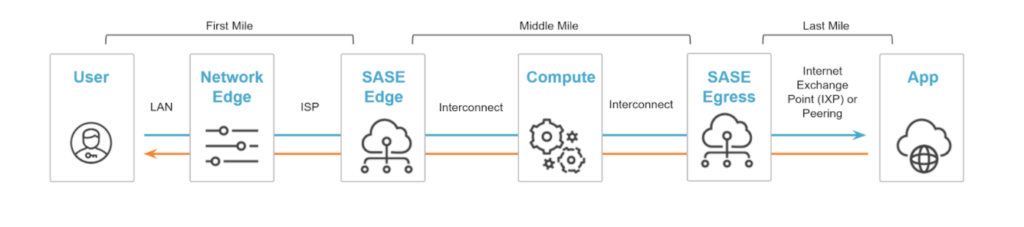

In the Secure Access Service Edge (SASE) model for delivering cloud-based security services, the “packet” flows through several logical components and boundaries, and it’s useful to provide them with names in order to clarify what’s what.

First Mile

Generally speaking, users could be in a number of different locations. They might be located at the office or at home, and they need to use a cloud-based application. In the first mile, the user’s request to access an application traverses a local area network to the network’s edge.

For users working from home, the user and the network edge are in the same building. On enterprise networks, there are generally far fewer network edges than offices or campuses. While SASE is an enabler of “local internet breakout,” most organizations still concentrate their network edges to one or a few locations.

Because the user’s location is indeterminate, and the quality of the security at the network edge is questionable or nonexistent, the protection at the perimeter is indeterminate at best. If you don’t know whether the user is going to be behind a corporate firewall, you can’t expect that firewall to deliver visibility or protection. The SASE model addresses this point by delivering consistent visibility and enforcement of security policy in the middle mile, rather than relying on security measures that may or may not exist in the first mile.

Middle Mile

Secure Access Service Edge isn’t a “destination,” but rather the delivery of networking and security services for traffic en route to the internet, cloud applications, or the data center. When users access applications, the packet has an entry point (the SASE edge) and an exit point (the SASE egress), with policy processing happening in the compute at some point in between. Keep in mind that this is a logical model for the purposes of discussion. Architectures in the market vary: some implementations place edge, compute, and egress all in the same data center; others decouple either the edge or the egress while keeping the other two functions together; and some run each function in a different data center location. Because these functions are implemented and operated in such different ways, the nomenclature is a source of confusion.
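To make the logical model concrete, here is a minimal sketch that treats the path of the packet as a list of segments and adds up their latencies. The `Segment` class, the function names, and every number are assumptions made up for illustration; they are not measurements and do not describe any particular vendor’s architecture. Treating co-located functions as adding zero latency is also a simplification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    """One logical hop on the path of the packet (hypothetical model)."""
    name: str
    latency_ms: float  # illustrative one-way latency, not a measurement


def build_path(first_mile_ms, edge_to_compute_ms, compute_to_egress_ms, last_mile_ms):
    """Assemble the first, middle, and last mile into an ordered path."""
    return [
        Segment("first mile", first_mile_ms),
        Segment("middle mile: SASE edge -> compute", edge_to_compute_ms),
        Segment("middle mile: compute -> SASE egress", compute_to_egress_ms),
        Segment("last mile", last_mile_ms),
    ]


def end_to_end_latency_ms(path):
    """Total latency is simply the sum of every segment on the path."""
    return sum(segment.latency_ms for segment in path)


# Edge, compute, and egress in one data center: the middle-mile hops are
# modeled as 0 ms (a simplification; real intra-site hops are small, not zero).
colocated = build_path(10.0, 0.0, 0.0, 15.0)

# Each function in a different data center: every handoff adds latency.
decoupled = build_path(10.0, 20.0, 5.0, 15.0)

for label, path in (("co-located", colocated), ("decoupled", decoupled)):
    print(f"{label}: {end_to_end_latency_ms(path):.1f} ms")
```

With these made-up inputs, the decoupled layout totals twice the latency of the co-located one, which is why it helps to ask where each function actually runs when comparing architectures.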

Last Mile

It wasn’t long ago that the carrier’s link to the internet was something that was only interesting to other carrier architects. Enterprise network architects might have been interested in the traceroute’s total time, but now there is a growing interest in how carriers handle routing to the internet, whether they use an internet exchange point (IXP) or rely on a peering relationship.

The basic premise of peering between cloud providers is not new, but it has come to the forefront for customers that are looking for high performance for specific applications. What’s not widely understood, however, is that carriers use different types of peering, and the terms are often used inconsistently. To briefly summarize:

- Private Peering: Private peering is a direct peering relationship between two networks that provides dedicated capacity that is not shared by any other parties. Like other forms of peering, it is designed to expedite traffic without having to route over the general internet. This type of peering can be implemented via a private network interconnect or via a private exchange.

- Public Peering: A SASE edge data center might reach the internet through an internet exchange point. With public peering, the IXP brokers the peering relationship between the two networks. The peering relationship exists between the IXP and the cloud edge, and thus indirectly between the SASE edge and the cloud edge. In general, this is still better than routing over the general internet, but it should be clear that this isn’t the same as private peering.

Note that in both scenarios, these carrier-level peering relationships are different from the concept of connecting an enterprise data center to a virtual private cloud using a partner circuit, such as ExpressRoute for Azure or Direct Connect for AWS. The basic underlying concept is similar, but it operates at a different end of the spectrum.
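The general preference described above (private peering, then public peering through an IXP, then the general internet) can be sketched as a simple ranking. This is a deliberately simplified illustration: the `Interconnect` and `Route` names are hypothetical, the destination string is a placeholder, and real networks express this kind of preference through BGP routing policy rather than a lookup like this one.

```python
from dataclasses import dataclass
from enum import IntEnum


class Interconnect(IntEnum):
    """Lower value means more preferred in this simplified model."""
    PRIVATE_PEERING = 0   # dedicated capacity between two networks
    PUBLIC_PEERING = 1    # shared capacity through an IXP
    GENERAL_INTERNET = 2  # no peering relationship with the destination


@dataclass(frozen=True)
class Route:
    destination: str            # placeholder label for a cloud application
    interconnect: Interconnect  # how the egress reaches that destination


def preferred_route(routes):
    """Return the route with the most preferred interconnect, or None."""
    return min(routes, key=lambda route: route.interconnect, default=None)


# Hypothetical set of ways to reach the same application.
candidates = [
    Route("example-saas", Interconnect.GENERAL_INTERNET),
    Route("example-saas", Interconnect.PUBLIC_PEERING),
    Route("example-saas", Interconnect.PRIVATE_PEERING),
]

best = preferred_route(candidates)
print(best.interconnect.name)
```

If the private peering entry is removed from `candidates`, the same function falls back to public peering, and then to the general internet when no peering exists at all.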

At Netskope, we’re building an innovative carrier-grade private network called NewEdge, designed to provide global coverage for cloud-delivered security. Clearing up the language that we use is helpful for a deeper dive into how it works. Fortunately, one doesn’t have to learn the complexities of operating a carrier network in order to benefit from the user experience it delivers. But it does help develop a deeper appreciation of what we’re doing to build the world’s largest security private cloud.

Now that we’ve covered the basics, keep an eye on this blog for further discussion on what we’re doing to change the world with NewEdge.

Are you looking to learn more about NewEdge? Check out our web page or request a demo to find out more about NewEdge and what it does.
