Companies face a challenging situation when legacy on-premises security defenses must support a hybrid work environment and zero trust principles. Complications can include poor user experience, the complexity of disjointed solutions, high operating costs, and increased security risk with potential data exposure. Simple allow and deny controls lack the understanding of transactional risk needed to adapt policy controls and provide real-time coaching to users. Continuing down this path leads to poor use of resources, limited business initiatives, frustrated users, and a greater potential for data exposure and regulatory fines. Blocking new applications and cloud services outright impedes digital transformation, while an open allow policy offers no data protection against transactional risk and no real-time coaching for users engaged in risky activities.

For example, generative AI has drawn a firestorm of attention, and security teams are reacting quickly as employees, contractors, and business partners leverage the value and economies of scale of these new SaaS applications. However, there are risks around what data is provided to generative AI applications like ChatGPT and Gemini. Their popularity also gives attackers an opportunity to phish, lure, and collect data from unsuspecting users. Generative AI is a good use case to illustrate the value of applying zero trust principles with security service edge (SSE) to provide safe access, protect data, and prevent the threats that come with its popularity.

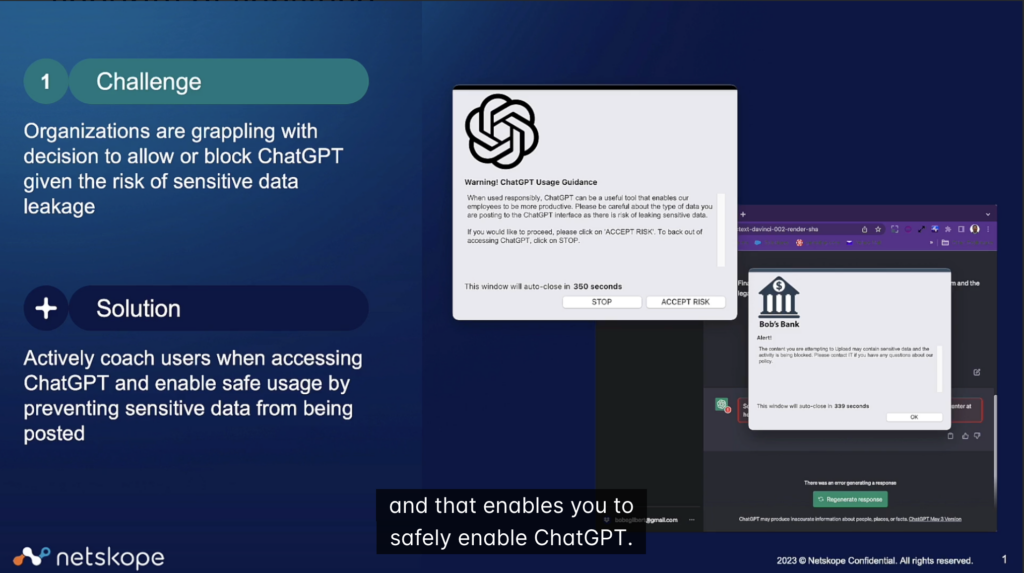

Blocking access to generative AI apps by specific domain or URL category keeps an organization from benefiting from them across many functional areas, including developing code, creating marketing content, and improving customer support. However, openly allowing access puts sensitive data at risk from uploads for analysis, and not every AI application or front-end website is low risk and well managed. The result is a gray area: allowing access with guardrails for data protection, which requires understanding company instances and activities and knowing when to provide real-time coaching to users. Security service edge (SSE) solutions require app connectors for generative AI applications that decode application transactions inline, so they can provide adaptive access policy controls, protect data, and coach users in real time.

For example, if a user with a company identity accesses ChatGPT, they would receive an alert on best practices for using generative AI, reminding them to avoid submitting sensitive company data. As the user interacts with ChatGPT, data exposed to the application can be inspected for sensitivity using standard, advanced, and AI/ML classifiers for data loss prevention (DLP). The company may also have invested in its own private version of the application, and this company instance can have different SSE policy controls than the public version. A URL category can provide monitoring of overall generative AI use, while SSE cloud platforms collect rich context on specific application transactions for advanced analytics, including the app, instance, activity, data sensitivity, justifications collected for use, and any policy alerts. SSE solutions need to assess transactional risk to make adaptive access policy decisions in real time, including for generative AI; more details are available on this webpage and in this video.
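The policy logic described above can be illustrated with a minimal Python sketch. It is not Netskope's implementation or API: the `Transaction` fields, the `"company"`/`"personal"` instance labels, the `allow`/`coach`/`block` actions, and the two regular-expression "classifiers" are all hypothetical stand-ins for the app, instance, activity, and data-sensitivity context an SSE platform evaluates inline.

```python
import re
from dataclasses import dataclass

# Hypothetical toy classifiers; real DLP uses far richer standard, advanced, and AI/ML classifiers.
SENSITIVE_PATTERNS = [
    re.compile(r"\b\d{4}[ -]?\d{4}[ -]?\d{4}[ -]?\d{4}\b"),  # card-number-like digit groups
    re.compile(r"\bCONFIDENTIAL\b", re.IGNORECASE),          # hypothetical document label
]


@dataclass
class Transaction:
    app: str                 # e.g. "ChatGPT"
    instance: str            # hypothetical labels: "company" or "personal"
    activity: str            # e.g. "login", "post", "upload"
    user_is_corporate: bool  # True if the user signed in with a company identity
    content: str = ""        # data the user is sending to the app


def is_sensitive(text: str) -> bool:
    """Return True if any toy classifier matches the content."""
    return any(pattern.search(text) for pattern in SENSITIVE_PATTERNS)


def decide(tx: Transaction) -> str:
    """Return a hypothetical action ('allow', 'coach', or 'block') for one transaction."""
    if tx.instance == "company":
        # The company's private instance gets its own, more permissive policy.
        return "allow"
    if tx.activity in ("post", "upload") and is_sensitive(tx.content):
        # Sensitive data headed to a public instance is stopped.
        return "block"
    if tx.user_is_corporate:
        # A company identity on a public instance sees best-practice coaching.
        return "coach"
    return "allow"


if __name__ == "__main__":
    prompt = Transaction("ChatGPT", "personal", "post", True, "Summarize this article")
    print(decide(prompt))
```

The rules are ordered so that blocking sensitive data sent to a public instance takes precedence over coaching; a real SSE platform would weigh many more signals before deciding.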

It is no surprise that data flowing into generative AI applications is at the center of attention for organizational risk. This use case and many others show why data is at the center of the zero trust security model as it interacts with users, devices, apps, and networks. This makes context and content the new perimeter for users and apps working from any location, now that hybrid work is the new normal. One of the main goals of implementing zero trust is to make security a business enabler, so your organization is more trusted, projects can leverage digital transformation safely, and data is protected in use, in motion, and at rest.

For a deeper perspective, this new white paper takes the position of consolidating security defenses into an SSE cloud platform to reduce security risks and protect data, support zero trust principles, improve user experiences, and enable business agility. This position supports a wider range of use cases with adaptive access policy controls based on transactional risk, while providing least privilege access and protecting data. The recommended action is to apply zero trust principles to SSE and understand how, together, they open new use cases often required for hybrid work environments and SaaS adoption. The benefits include reducing security risks and protecting data, lowering operating costs, freeing up full-time employees for new projects, and increasing business agility.

Learn more in the white paper Zero Trust Security Model Applied to Netskope Intelligent SSE.
