The rapid rise of generative AI (genAI) applications is reshaping enterprise technology strategies, pushing security leaders to reevaluate risk, compliance, and data governance policies. The latest surge in DeepSeek usage is a wake-up call for CISOs, illustrating how quickly new genAI tools can infiltrate the enterprise.

In only 48 hours, Netskope Threat Labs observed a staggering 1,052% increase in DeepSeek usage across our customer base. With 48% of enterprises seeing some level of activity, this adoption spike highlights the need for robust AI security controls.

DeepSeek’s adoption spike: A familiar pattern in genAI trends

This rapid uptick isn’t unprecedented. Similar patterns emerged with ChatGPT, Google Gemini, and other AI-powered applications. The adoption curve typically begins with a curiosity-driven spike that peaks and then tapers off as organizations implement security controls or employees move on to the next trend.

Netskope Threat Labs examined regional DeepSeek adoption trends from January 24 to January 29 and observed the following increases:

| Region | Observed increase (January 24-29) |
|---|---|
| United States | +415% |
| Europe | +1,256% |
| Asia | +2,459% |

These numbers reflect the speed at which genAI tools spread globally, often outpacing enterprise security teams’ ability to react.
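Percent increases of this size can be hard to picture. The short Python sketch below converts the figures reported above into multiples of the baseline, using the standard definition that a +P% increase means the new value is (1 + P/100) times the old one. The dictionary and function names are illustrative only; the only data used are the percentages stated in this post.

```python
# Convert the reported percent increases into "times baseline" multipliers.
# A +P% increase means new_value = (1 + P / 100) * baseline.

REPORTED_INCREASE_PCT = {
    "Overall (48 hours)": 1052,
    "United States": 415,
    "Europe": 1256,
    "Asia": 2459,
}


def multiplier(increase_pct: float) -> float:
    """Return new/baseline for a given percent increase."""
    return 1 + increase_pct / 100


def percent_increase(baseline: float, new: float) -> float:
    """Return the percent increase from baseline to new."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (new - baseline) / baseline * 100


if __name__ == "__main__":
    for label, pct in REPORTED_INCREASE_PCT.items():
        print(f"{label}: +{pct:,}% -> {multiplier(pct):.2f}x baseline")
```

Read this way, the overall 1,052% jump means DeepSeek usage reached roughly 11.5 times its starting level, and the Asia figure corresponds to roughly 25.6 times.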

Understanding the risks: Data privacy, compliance, and shadow AI

The widespread adoption of DeepSeek raises crucial security concerns, such as:

- Data privacy and governance: Employees may unknowingly input sensitive data into AI tools, exposing intellectual property and regulated information.

- Compliance and regulatory risks: Uncontrolled AI adoption can lead to non-compliance with GDPR, CCPA, and industry-specific regulations.

- Shadow AI in the enterprise: Without visibility and governance, unsanctioned AI applications can create security blind spots.

Adding to these concerns, the Netskope AI Labs team recently evaluated the security of the open-source DeepSeek R1 model and found that it was vulnerable to 27% of prompt injection attacks, making it three times more vulnerable than OpenAI’s o1 model. These findings highlight the very real risks of poorly secured genAI applications and reinforce the need for enterprises to establish stringent access controls and data governance policies. As adoption surges, enterprises must act swiftly to assess the risks and enforce policies that balance innovation with security.

A strategic approach: Managing AI usage with proactive controls

CISOs and security teams must take a structured approach to genAI governance:

- Gain visibility: Deploy tools that monitor AI usage within the organization to understand who is using these applications and for what purpose.

- Create a culture to drive progress: Don’t fight it. Identify workforce needs and provide clear guidance on acceptable use cases and tools. Shift from “No” to “Yes.”

- Enforce AI security policies: Implement policies that regulate data-sharing practices and restrict unauthorized access to AI tools.

- Educate employees: Provide training on the risks of inputting sensitive data into genAI applications.
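To make the "Enforce AI security policies" step more concrete, here is a minimal, hypothetical Python sketch of a policy decision for a single genAI request. It is not Netskope’s implementation or API: the app identifiers, the `SANCTIONED_APPS` set, the regex-based `SENSITIVE_PATTERNS` (a toy stand-in for real data classification), and the allow/coach/block outcomes are all assumptions chosen for illustration.

```python
import re
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    COACH = "coach"  # warn the user and point them to an approved tool
    BLOCK = "block"


# Hypothetical policy inputs; a real deployment would load these from a
# governance system instead of hard-coding them.
SANCTIONED_APPS = {"approved-assistant"}

# Toy patterns standing in for real data classification rules.
SENSITIVE_PATTERNS = [
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),  # US SSN-like number
    re.compile(r"(?i)\bconfidential\b"),   # documents marked confidential
]


@dataclass
class Request:
    app: str                 # hypothetical app identifier
    corporate_account: bool  # corporate vs. personal login
    prompt: str              # text the user is about to submit


def contains_sensitive_data(text: str) -> bool:
    """Return True if any toy sensitive-data pattern matches."""
    return any(p.search(text) for p in SENSITIVE_PATTERNS)


def decide(req: Request) -> Action:
    """Allow approved corporate use, block sensitive data elsewhere, coach the rest."""
    if req.app in SANCTIONED_APPS and req.corporate_account:
        return Action.ALLOW
    if contains_sensitive_data(req.prompt):
        return Action.BLOCK
    return Action.COACH


if __name__ == "__main__":
    print(decide(Request("unapproved-chatbot", True, "Draft a status update")))
```

The design choice here is to coach rather than block by default, which matches the advice to shift from “No” to “Yes”: users are steered toward sanctioned tools, and hard blocks are reserved for sensitive data leaving approved channels.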

How Netskope secures genAI

DeepSeek is the latest, but it won’t be the last standalone or genAI-enhanced SaaS app. Netskope continuously assesses the risk of over 80,000 applications, with new ones added automatically. This allows security and risk management teams to embrace genAI technology that aligns with their risk tolerance while guiding users away from unapproved apps and toward safer alternatives.

Key capabilities include:

- Evaluating data privacy, compliance, security controls, and disaster recovery and business continuity planning (DR/BCP).

- Providing dynamic access and data security controls based on risk levels.

- Differentiating between corporate and personal AI tool usage, coaching users and steering user traffic toward approved tools.

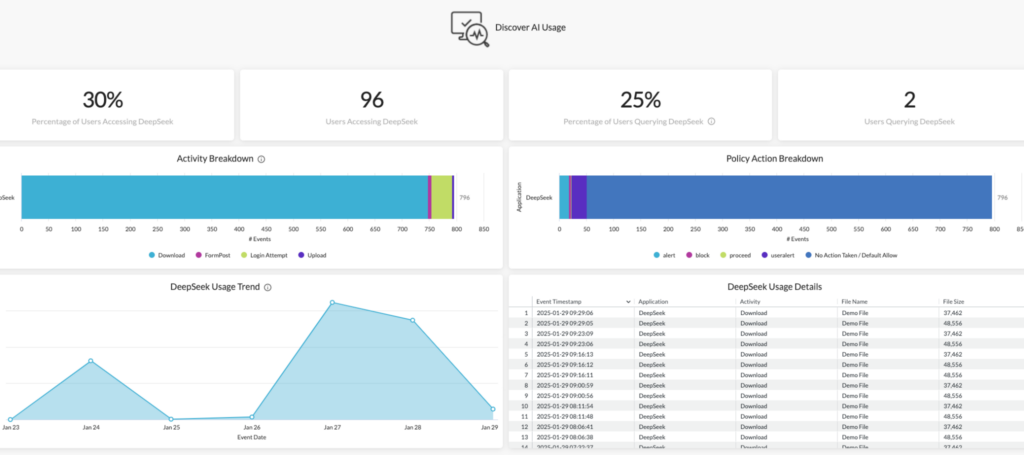

Watch how the Netskope One platform prevents data exposure in DeepSeek R1 while guiding users in real time. The DeepSeek connector is now available in the Netskope Dashboard, enabling seamless integration and real-time security controls for your genAI strategy.

Looking ahead: The future of AI in the enterprise

The DeepSeek adoption wave is a glimpse into the future of genAI in the workplace. As AI technology advances, we can expect faster adoption cycles driven by factors such as:

- Cheaper AI chips and compute power

- Increased innovation outside the U.S.

- Faster AI model deployments

AI is not slowing down, and neither should your security strategy. The rapid adoption of DeepSeek serves as a powerful case study in AI security. CISOs must be prepared for the next wave of AI applications, implementing proactive measures to protect enterprise data while enabling innovation.

The future of AI in the enterprise is unfolding now. Let’s secure it together.

To learn more about how Netskope can help you protect your data while embracing AI innovation, check out our solution brief on Securing Generative AI.

For existing customers, we have created a DeepSeek Usage Dashboard that helps you monitor and control DeepSeek usage in your organization. Learn more in our community article.
