The term “zero trust” means the absence of implicit trust. When we started with “zero trust,” we no longer trusted users because they weren’t on our network domain. As our staff went remote, we had to implement stronger authentication to move from zero trust to some level of implicit trust. The problem is that, in this model, trust is all or nothing.

Today, the complexities go beyond having remote workers. Often we do not own the endpoint device or directly employ the contract worker. We don’t control the cloud network. We don’t write or penetration test the hundreds of software-as-a-service (SaaS) applications deployed across the different business units. And with this complexity, the old “zero trust/implicit trust” model is too restrictive for business agility and too permissive in terms of risk.

Trust issues: Going from zero to adaptive

We need to evolve our thinking around zero trust to a model that’s more adaptive—based on multiple streams of telemetry that are in continuous, real-time flux. This is what we would call continuous adaptive trust. A continuous adaptive trust security architecture gathers telemetry around users, applications, and data from different sources to make adaptive decisions about network risks in real-time. Security becomes more than all or nothing; the architecture can make granular control decisions, not just “allow” or “block.”

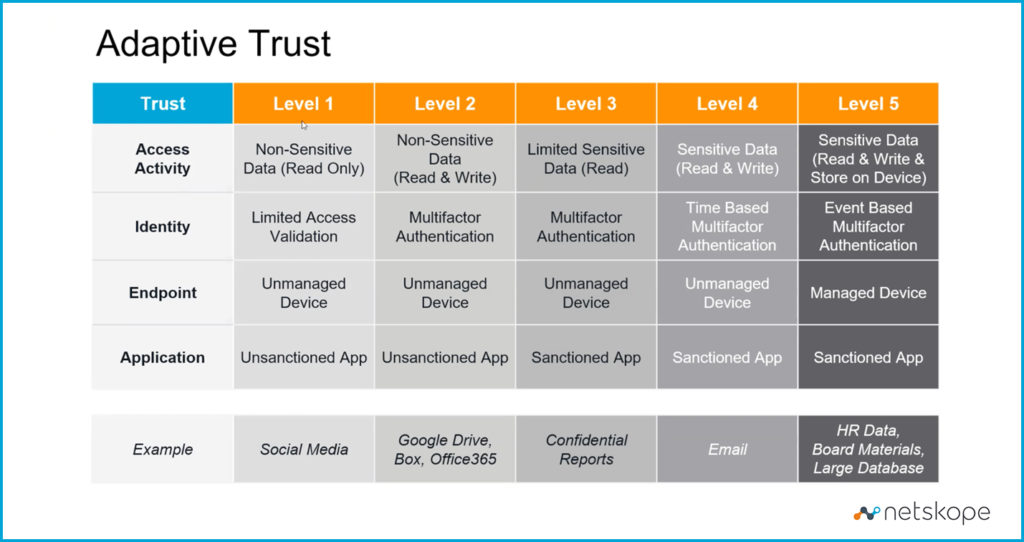

This is best illustrated through an adaptive trust matrix, as pictured in Figure 1, where risk is driven by the value of the data being accessed:

Start with Level 1 trust. Social media, for example, is considered non-sensitive, read-only data, so only a low level of trust is needed to allow the transaction. Therefore, the following controls are acceptable:

- Limited access validation

- Unmanaged device (not owned and controlled by the company)

- Unsanctioned application (not reviewed and approved by security)

In the second example, the controls are stricter than in the first. Between Level 1 and Level 5 is a series of trust levels, each requiring stricter controls than the one before. You can use this matrix to set real-time rules that control access to information.

In the beginning, the only choice was to either deny or allow the transaction. Better solutions now provide the ability to not only adapt the trust level but also make smart decisions about what type of access is allowed.
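As a minimal sketch of how such a matrix could drive granular decisions, the Python below maps the three controls named above (access validation, device management, application sanctioning) to an earned trust level and compares it with the level the data requires. The point weights, the `READ_ONLY` outcome, and every name in the code are illustrative assumptions, not taken from any product.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    """Graduated outcomes instead of a binary allow/block (assumed set)."""
    BLOCK = "block"
    READ_ONLY = "read_only"
    ALLOW = "allow"


@dataclass(frozen=True)
class Context:
    strong_auth: bool     # stronger access validation passed (e.g. MFA)
    managed_device: bool  # device owned and controlled by the company
    sanctioned_app: bool  # application reviewed and approved by security


def earned_trust(ctx: Context) -> int:
    """Hypothetical scoring: everyone starts at Level 1; controls add trust."""
    level = 1
    level += 2 if ctx.strong_auth else 0
    level += 1 if ctx.managed_device else 0
    level += 1 if ctx.sanctioned_app else 0
    return level  # always between 1 and 5


def decide(required_level: int, ctx: Context) -> Action:
    """Compare earned trust with the level the data requires (1 to 5)."""
    if not 1 <= required_level <= 5:
        raise ValueError("required_level must be between 1 and 5")
    earned = earned_trust(ctx)
    if earned >= required_level:
        return Action.ALLOW
    if earned == required_level - 1:
        # One level short: degrade access rather than deny outright.
        return Action.READ_ONLY
    return Action.BLOCK


# Level 1 data (the social media example) needs no extra controls.
print(decide(1, Context(False, False, False)))
# Level 5 data from an unsanctioned app is degraded, not simply blocked.
print(decide(5, Context(True, True, False)))
```

In a real deployment the weights and outcomes would come from the organization's own matrix; the sketch only shows that the decision can be a function of both the data's value and the controls in place.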

Continuous user, data, and application risk

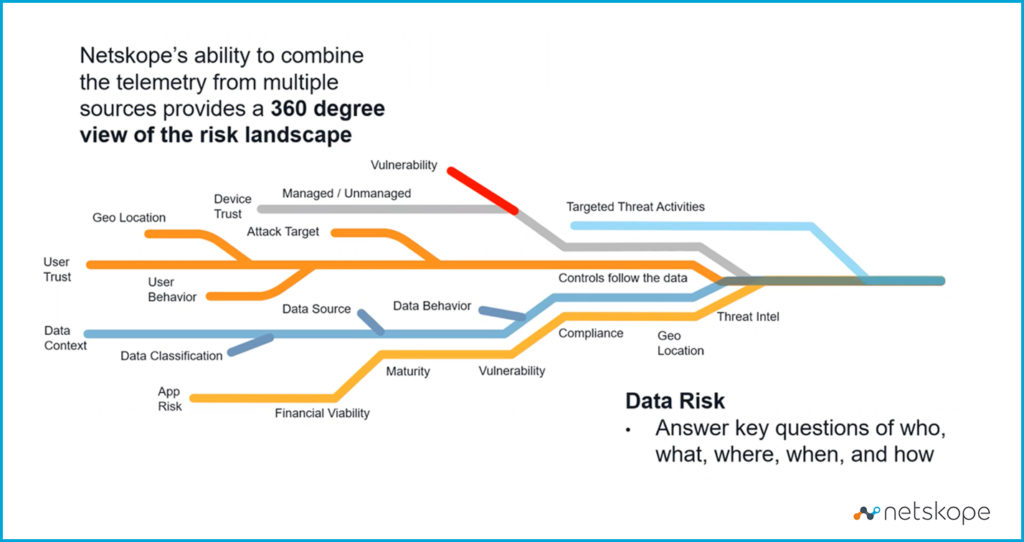

In practice, the ability to deliver adaptive trust relies on a 360-degree view of the risk landscape at all times. We can establish this complex and comprehensive view by continuously monitoring the risk telemetry for users, data, and applications. (See Figure 2.)

Users: We can start to look at how much we trust the user because we can see the user’s behavior, their geolocation (where they’re coming from), and how active a target they’ve been in the past. Because of their position, some users are more likely to be targeted for attack. C-level executives, like CFOs, are frequent targets of phishing schemes that trick employees into moving money out of the company.

Data: We also need to look at the data context. What’s the classification of this data—is it sensitive? What’s the source—where did this data come from? What do we know about this data that brings the context into focus?

Applications: Next, we look at the application level. What’s the financial viability of the application vendor? Is this an established company that resides in an approved country? Has a risk review already been completed? Financial viability, geolocation, and risk review will impact the application’s level of inherent risk.

The maturity level of the application is another factor. Do we have a sense of how it was developed—a strong software development lifecycle process? How mature are the controls around this application? Are there any known vulnerabilities in the application itself? Compliance is also very important. If the application stores sensitive data, does it have compliance certificates (such as HIPAA, PCI, or GDPR—as applicable)? With regard to regulatory and financial compliance, has the developer had a Service Organization Control assessment done? Location is a related issue, especially when you’re talking about sensitive data or tracking GDPR compliance requirements.

Then there’s threat intelligence. What do we have from our threat intelligence feeds to understand this application and its inherent risks? For example, threat intelligence about the compromised SolarWinds software components would be relevant if this application were on the affected list.
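The application factors above (vendor viability, location, risk review, compliance, known vulnerabilities, and threat intelligence) could be folded into a single inherent-risk score. The following is a rough sketch under stated assumptions: the field names, the weights, and the 0 to 100 scale are all hypothetical and would need tuning against an organization's own risk policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AppProfile:
    vendor_financially_viable: bool
    approved_country: bool
    risk_review_done: bool
    has_required_compliance: bool  # e.g. the certifications that apply
    known_vulnerabilities: int     # count of open known vulnerabilities
    on_threat_intel_list: bool     # flagged by a threat intelligence feed


def app_inherent_risk(app: AppProfile) -> int:
    """Return a risk score from 0 (lowest) to 100 (highest).

    All weights are illustrative assumptions.
    """
    if app.on_threat_intel_list:
        # A known-compromised application overrides every other factor.
        return 100
    risk = 0
    if not app.vendor_financially_viable:
        risk += 15
    if not app.approved_country:
        risk += 15
    if not app.risk_review_done:
        risk += 20
    if not app.has_required_compliance:
        risk += 25
    # Cap the vulnerability contribution so the total cannot exceed 100.
    risk += min(app.known_vulnerabilities, 5) * 5
    return risk


vetted = AppProfile(True, True, True, True, 0, False)
unreviewed = AppProfile(True, True, False, True, 2, False)
print(app_inherent_risk(vetted), app_inherent_risk(unreviewed))
```

Treating a threat intelligence hit as an override rather than one more weighted term is a design choice in this sketch, reflecting that a compromised component matters regardless of how mature the vendor otherwise appears.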

In addition to these three sources, we can look at the device: is it a managed or an unmanaged device? How vulnerable is this device? Has it been patched, or do we know it to be unstable because of the operating system or software it is running? We can also look at targeted threat activity. Are there any active threats targeting the organization right now that might relate to this transaction? Because this all happens in real time, a single transaction is adapted to all of these different inputs.
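To make the idea of one transaction adapting to many inputs concrete, here is a small sketch that re-evaluates a decision whenever any telemetry value changes. The 0.0 to 1.0 scales, the thresholds, the threat bump of 0.2, and the outcome names are assumptions chosen for illustration only.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Telemetry:
    user_risk: float         # 0.0 (trusted) to 1.0 (risky)
    app_risk: float          # inherent risk of the application
    device_risk: float       # unmanaged or unpatched devices score higher
    data_sensitivity: float  # 0.0 (public) to 1.0 (highly sensitive)
    active_threat: bool      # organization is currently being targeted


def evaluate(t: Telemetry) -> str:
    """Return 'allow', 'read_only', or 'block' for a single transaction."""
    # The weakest link among user, application, and device sets exposure.
    exposure = max(t.user_risk, t.app_risk, t.device_risk)
    if t.active_threat:
        exposure = min(1.0, exposure + 0.2)
    # The same exposure matters more as the data becomes more valuable.
    score = exposure * t.data_sensitivity
    if score < 0.2:
        return "allow"
    if score < 0.5:
        return "read_only"
    return "block"


# The same user and device, evaluated as conditions change.
baseline = Telemetry(0.1, 0.1, 0.9, 0.1, False)
print(evaluate(baseline))                                # low-value data
print(evaluate(replace(baseline, data_sensitivity=0.5)))  # more sensitive data
print(evaluate(replace(baseline, data_sensitivity=0.5, active_threat=True)))
```

Because `evaluate` is a pure function of the current telemetry, it can be called again on every transaction, which is one simple way to approximate the continuous re-assessment described above.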

Continuous adaptive trust and SASE

With all this relevant information assembled, we’re now looking at risks from all angles and can take smarter actions. The information we use changes continuously, which lets us track the evolving risk landscape in real time. Who’s doing it? What are they doing? Where are they? When is this occurring? And how is it occurring?

This kind of trust is really at the heart of SASE architecture. It connects all those different products from across the infrastructure and draws in the disparate telemetry streams in order to continuously make complex trust decisions. Using disparate security products would not provide the ability to share all the telemetry in real-time to make these risk decisions. This requires a single platform with tightly integrated services that can communicate with each other in real-time to be able to make sound risk assessments and apply the right level of control at any given moment.

This article was originally published by United States Cybersecurity Magazine
