Co-authored by Carmine Clementelli and Jason Clark

In recent times, the rise of artificial intelligence (AI) has transformed how a growing number of corporate users approach their daily work. Generative AI-based SaaS applications like ChatGPT offer organizations and their employees countless opportunities to improve productivity, simplify numerous tasks, enhance services, and streamline operations. Teams and individuals can conveniently use ChatGPT to generate content, translate text, process large amounts of data, rapidly build financial plans, write and debug code, and much more.

However, while AI apps have the potential to improve our work, they can also expose sensitive data to new channels of data loss, putting organizations at risk of data breaches and non-compliance. As my colleague Yihua Liao, head of Netskope AI Labs, recently pointed out, “by acknowledging and addressing these challenges, security teams can ensure that AI is used responsibly and effectively in the fight against cyber threats.”

Here are some specific examples of how sensitive data can be exposed to ChatGPT and other cloud-based AI apps:

- Text containing PII (personally identifiable information) can be copied and pasted into the chatbot to generate email ideas, responses to customer inquiries, or personalized letters, or to run sentiment analysis

- Health information can be typed in the chatbot to craft individualized treatment plans, and even medical imaging such as CT and MRI scans can be processed and enhanced thanks to AI

- Proprietary source code can be uploaded by software developers for debugging, code completion, readability, and performance improvements

- Files containing company secrets, such as earnings report drafts, mergers and acquisitions (M&A) documents, pre-release announcements, and other confidential data, can be uploaded for grammar and writing checks

- Financial information, such as corporate transactions, undisclosed operating revenue, credit card numbers, customers’ credit ratings, statements, and payment histories, can be submitted to ChatGPT for financial planning, document processing, customer onboarding, data synthesis, compliance, fraud detection, and more

Organizations must take serious countermeasures to safeguard the confidentiality and security of their sensitive data in our modern cloud-enabled world: monitoring the use and abuse of risky SaaS applications and instances, limiting the exposure of sensitive information through them, and securing confidential documents against accidental loss and theft.

How Netskope helps prevent the cyber risks of ChatGPT

It all starts with visibility. Security teams must use automated tools that continuously monitor which applications (such as ChatGPT) corporate users attempt to access, as well as how, when, from where, and how often. It is essential to understand the different levels of risk that each application poses to the organization and to be able to define granular, real-time access control policies based on categorizations and security conditions that may change over time.
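As a rough illustration of this kind of visibility, the sketch below aggregates application access events for one app by user and location and records when the app was first and last seen. It is a minimal sketch, not Netskope's implementation: the `AccessEvent` record, its fields, and the `summarize` helper are hypothetical, and it assumes access logs have already been collected and parsed into that shape.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class AccessEvent:
    """Hypothetical parsed access-log record."""
    user: str
    app: str
    timestamp: datetime
    location: str  # e.g. a country or office name (assumed field)


def summarize(events: list[AccessEvent], app: str) -> dict:
    """Summarize who accessed `app`, from where, when, and how often."""
    relevant = [e for e in events if e.app == app]
    return {
        "total": len(relevant),
        "by_user": Counter(e.user for e in relevant),
        "by_location": Counter(e.location for e in relevant),
        # default=None avoids a ValueError when the app was never accessed
        "first_seen": min((e.timestamp for e in relevant), default=None),
        "last_seen": max((e.timestamp for e in relevant), default=None),
    }
```

In practice a summary like this would be recomputed continuously and combined with a per-app risk rating before any access policy is applied.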

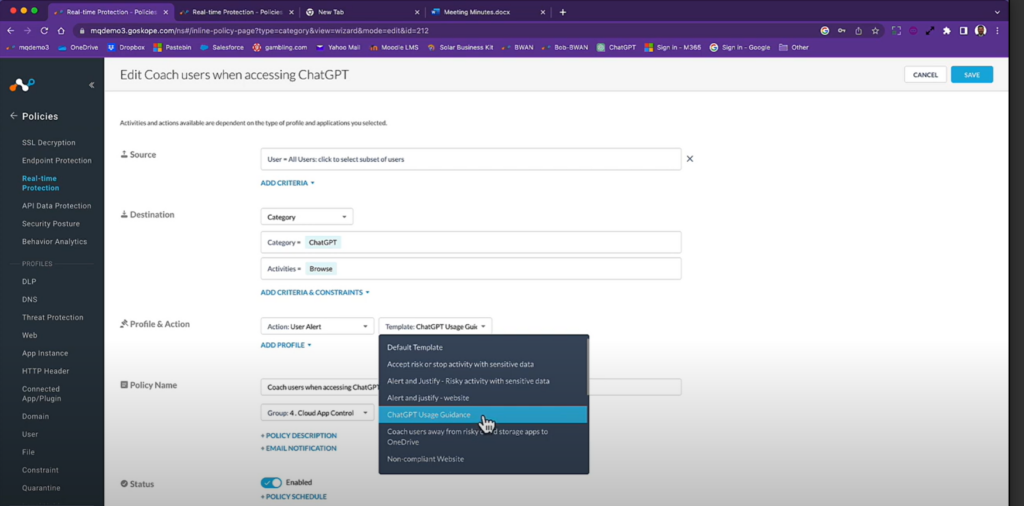

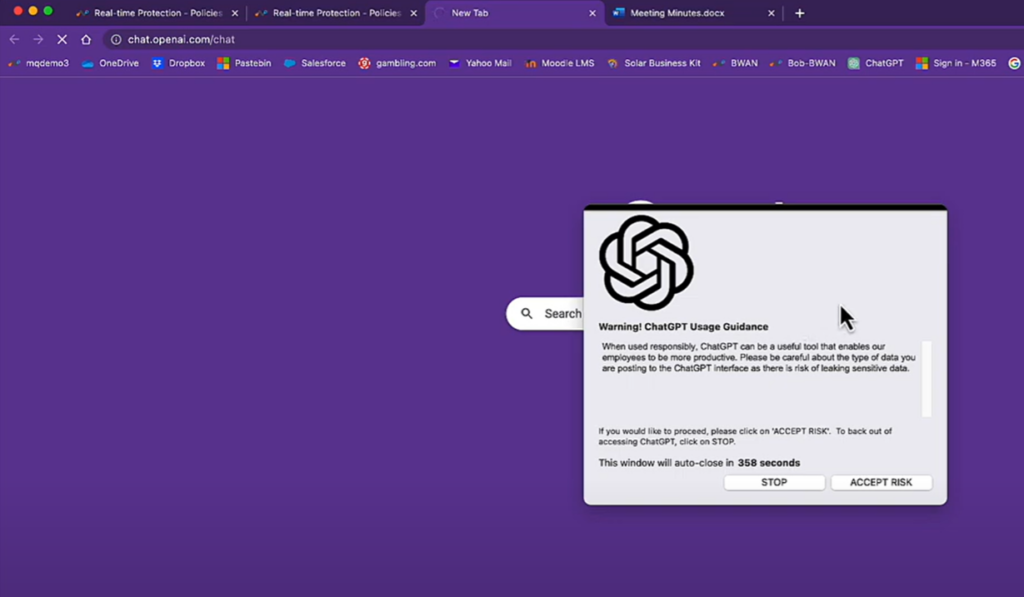

While clearly malicious applications should be blocked, access to applications like ChatGPT is often better left to users’ discretion: security teams can tolerate, rather than stop, activities that make sense for some or even most business groups. At the same time, security teams are responsible for making employees aware of applications and activities that are deemed risky. This is mainly accomplished through real-time alerts and automated coaching workflows that involve users in access decisions once they have acknowledged the risk.

Netskope is a market leader in cloud security and data protection, with over a decade of experience, offering the broadest visibility and the finest control over thousands of new SaaS applications like ChatGPT. Netskope provides flexible security options to control access to generative AI-based SaaS applications like ChatGPT and to automatically protect sensitive data. Examples of access control policies include real-time coaching workflows that are triggered every time users open ChatGPT, such as customizable warning popups that offer guidance on responsible use of the application, explain the associated risks, and request acknowledgement or justification.
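The allow, coach, and block logic described above can be sketched as a simple decision function. This is a hypothetical illustration, not Netskope's policy engine or configuration syntax: the category names, the 0 to 100 `risk_score` scale, the thresholds, and the `decide` and `may_proceed` helpers are all assumptions chosen for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    COACH = "coach"  # show a warning popup and ask for acknowledgement or justification
    BLOCK = "block"


@dataclass
class AppAccess:
    app_name: str
    category: str    # hypothetical categories, e.g. "generative_ai", "malicious"
    risk_score: int  # hypothetical 0-100 scale, higher means riskier


def decide(access: AppAccess, coach_threshold: int = 50, block_threshold: int = 90) -> Action:
    """Choose an action when a user opens a SaaS app (illustrative policy only)."""
    if access.category == "malicious" or access.risk_score >= block_threshold:
        return Action.BLOCK
    if access.category == "generative_ai" or access.risk_score >= coach_threshold:
        return Action.COACH
    return Action.ALLOW


def may_proceed(action: Action, acknowledged: bool = False, justification: str = "") -> bool:
    """After a coaching popup, let the user continue if they acknowledged the risk or justified the use."""
    if action is Action.BLOCK:
        return False
    if action is Action.COACH:
        return acknowledged or bool(justification.strip())
    return True
```

For example, `decide(AppAccess("ChatGPT", "generative_ai", 40))` returns `Action.COACH`, so the user sees the warning and continues only after acknowledging it. Keeping the decision separate from the user's response mirrors the idea of involving users in the access decision rather than blocking outright.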

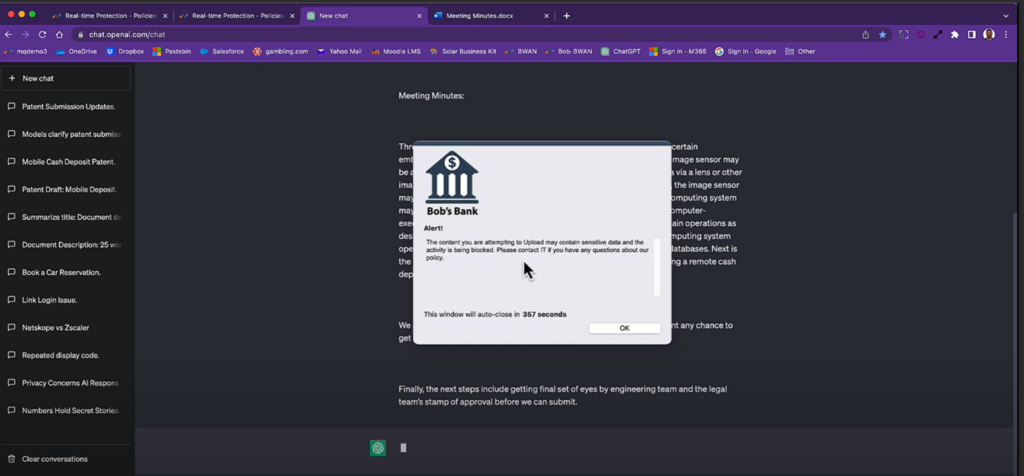

Users make mistakes and may negligently put sensitive data at risk. While access to ChatGPT can be granted, it is paramount to limit the uploading and posting of highly sensitive data through ChatGPT and other potentially risky data exposure vectors in the cloud. This can only be accomplished through modern data loss prevention (DLP) techniques, and Netskope’s advanced DLP capabilities automatically identify flows of sensitive data and categorize sensitive posts with the highest level of precision.

Accuracy ensures that the system monitors and prevents only sensitive data uploads (including files and pasted clipboard text) through generative AI-based apps, without stopping harmless queries and safe tasks in the chatbot. Maturity counts, and again, Netskope offers more than a decade of experience and the highest degree of data protection efficacy in cloud-delivered DLP. With Netskope’s advanced DLP, powered by ML and AI models, thousands of file types, personally identifiable information, intellectual property (IP), financial records, and other sensitive data are confidently identified and automatically protected from unwanted and non-compliant exposure.
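To illustrate why precision matters, here is a minimal sketch of one classic DLP technique: pattern matching combined with validation. It flags candidate payment card numbers in text before it is posted to a chatbot, then applies the Luhn checksum to discard digit strings that only look like card numbers. This is a toy under stated assumptions, not how Netskope's ML-based DLP works; the function names are hypothetical, and real DLP engines combine many detectors.

```python
import re

# Candidate card numbers: 13-19 digits, optionally separated by single spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def find_card_numbers(text: str) -> list[str]:
    """Return Luhn-valid card-like numbers found in `text`, separators removed."""
    found = []
    for match in CARD_CANDIDATE.finditer(text):
        digits = re.sub(r"[ -]", "", match.group())
        if 13 <= len(digits) <= 19 and luhn_valid(digits):
            found.append(digits)
    return found


def should_block_post(text: str) -> bool:
    """Hypothetical policy hook: block the post if it contains a likely card number."""
    return bool(find_card_numbers(text))
```

A prompt such as "Summarize our Q3 roadmap" passes untouched, while one containing the well-known test number 4111 1111 1111 1111 is flagged. A 16-digit string that fails the checksum is ignored, which is the kind of false positive the validation step is meant to avoid.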

In addition to selectively stopping uploads and posts of sensitive information to ChatGPT, visual coaching messages can be automated in real time to provide guidance on data posting violations, inform users about corporate security policies, and help reduce repeated risky behavior over time, taking the burden off security response teams.

To learn more, watch our demo video about safely enabling ChatGPT or visit:

- https://www.netskope.com/netskope-ai-labs

- https://www.netskope.com/products/casb

- https://www.netskope.com/products/data-loss-prevention

Additionally, if you’re interested in hearing more about generative AI and data security, please register for our LinkedIn Live event ChatGPT: Fortifying Data Security in the AI App-ocalypse, featuring Krishna Narayanaswamy, Naveen Palavalli, and Neil Thacker, on Thursday, June 22 at 9:30am PT.
