Three Requirements for Effective Cloud Incident Management

Working with some of our largest customers here at Netskope, I’ve been involved in several cloud DLP implementations. More than 80 percent of our customers choose to include the Netskope data loss prevention solution, and a DLP deployment requires an effective cloud incident management program. Without effective incident management, a single incident can rapidly disrupt business operations, information security, IT systems, employees or customers, and other vital business functions. How to prepare for and manage incidents when something goes wrong is one of the top security concerns for executives considering a cloud migration. Below are three requirements I’ve observed at the customers with the most successful cloud policy enforcement and DLP programs, which apply them in conjunction with Netskope as their cloud control point.

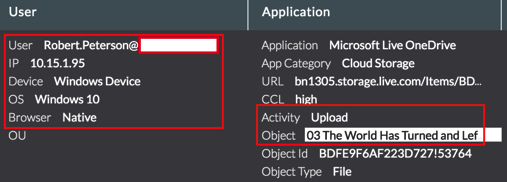

- Contextual information for analysis. A big problem, even for smaller companies, is the volume of false positives, which can quickly overwhelm the response team. Make sure you’re tracking granular information such as the type of device a user is on, the actual activity (e.g., download or share), location, network, and more. This information helps you write cloud policies that reduce false positives, and it also helps you triage when a policy fires. It’s much easier to prioritize an incident and act on it when you can compare one that happened on-premises with one that happened remotely on an unmanaged device and involved sharing sensitive data with an outside party.

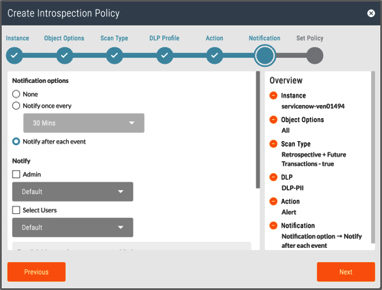
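
To make the triage idea concrete, here is a minimal sketch that ranks incidents by the context fields mentioned above. The `Incident` fields, the weights, and the `risk_score`/`triage` helpers are all hypothetical illustrations, not a Netskope schema or API; in practice the weights would be tuned against your own incident history.

```python
from dataclasses import dataclass

# Hypothetical incident record; field names are illustrative, not a Netskope schema.
@dataclass
class Incident:
    incident_id: str
    activity: str            # e.g. "download", "share", "upload"
    managed_device: bool     # True if the device is corporate-managed
    on_premises: bool        # True if the user was on the corporate network
    shared_externally: bool  # True if data went to an outside party
    sensitive_data: bool     # True if a DLP profile matched

# Illustrative weights (assumptions); tune them against your own incident history.
WEIGHTS = {
    "unmanaged_device": 3,
    "remote": 2,
    "shared_externally": 4,
    "sensitive_data": 5,
    "risky_activity": 1,
}
RISKY_ACTIVITIES = {"download", "share"}

def risk_score(inc: Incident) -> int:
    """Sum the weights of every risk factor present in the incident's context."""
    score = 0
    if not inc.managed_device:
        score += WEIGHTS["unmanaged_device"]
    if not inc.on_premises:
        score += WEIGHTS["remote"]
    if inc.shared_externally:
        score += WEIGHTS["shared_externally"]
    if inc.sensitive_data:
        score += WEIGHTS["sensitive_data"]
    if inc.activity in RISKY_ACTIVITIES:
        score += WEIGHTS["risky_activity"]
    return score

def triage(incidents: list[Incident]) -> list[Incident]:
    """Return incidents ordered from highest to lowest risk score."""
    return sorted(incidents, key=risk_score, reverse=True)

if __name__ == "__main__":
    office = Incident("INC-1", "download", managed_device=True, on_premises=True,
                      shared_externally=False, sensitive_data=True)
    remote = Incident("INC-2", "share", managed_device=False, on_premises=False,
                      shared_externally=True, sensitive_data=True)
    for inc in triage([office, remote]):
        print(inc.incident_id, risk_score(inc))  # INC-2 15, then INC-1 6
```

The remote share from an unmanaged device to an outside party lands at the top of the queue, which is exactly the comparison the paragraph above describes.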

- Finely tuned cloud DLP policies. One of the main challenges in deploying DLP is showing immediate value without drowning in data. Determine the data or information you want to discover, monitor, and protect; this is all about efficient cloud policy creation. Once you have visibility into the cloud services in use and the activities users perform in them, the next step is to define policies that enforce your business rules. Policies let you enforce an action (such as block) based on the cloud service, cloud app category, users and groups, cloud service activity, and so on. You can also define DLP and threat protection profiles that inspect traffic to prevent leaks and exposure of sensitive and critical data. Ask yourself: are you creating alerts and policies for specific high-risk activities and data? What kinds of activities or potential violation types are you tracking? With the right set of policies in place, you can more easily separate valid violations that matter to your organization from normal, everyday activity. Use the contextual information from the first requirement to benchmark and inform your policy settings. With proper forensics, you can see everything you need about what your users are doing in the cloud and what you should be looking at. As an example, here are two DLP policies, one for data at rest (Introspection) and one in real time (Inline), that alert on downloads and uploads of sensitive data such as PII.

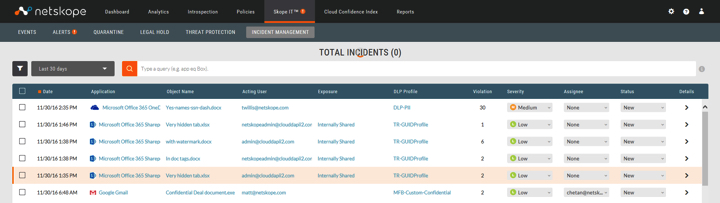
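
The policy model described above (match on app category, activity, and user group, optionally inspect content with a DLP profile, then take an action) can be sketched roughly as follows. This is a hypothetical illustration: the `Policy` and `Event` types, the first-match evaluation order, and the simplified PII regexes are assumptions for the example, not Netskope’s policy engine or DLP profiles.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical DLP profile: a named set of regexes for sensitive data.
# These patterns are simplified illustrations, not production-grade detectors.
DLP_PROFILES = {
    "PII": [
        re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),        # US SSN-like pattern
        re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.]+\b"),   # email address
    ],
}

@dataclass
class Policy:
    name: str
    app_categories: set[str]     # e.g. {"Cloud Storage"}
    activities: set[str]         # e.g. {"upload", "download"}
    user_groups: set[str]        # empty set means "all groups"
    dlp_profile: Optional[str]   # profile used to inspect content, or None
    action: str                  # "alert" or "block"

@dataclass
class Event:
    user_group: str
    app_category: str
    activity: str
    content: str

def matches_profile(profile: str, content: str) -> bool:
    """True if any pattern in the named DLP profile occurs in the content."""
    return any(p.search(content) for p in DLP_PROFILES[profile])

def evaluate(policies: list[Policy], event: Event) -> str:
    """Return the action of the first matching policy, or "allow" if none match."""
    for policy in policies:
        if event.app_category not in policy.app_categories:
            continue
        if event.activity not in policy.activities:
            continue
        if policy.user_groups and event.user_group not in policy.user_groups:
            continue
        if policy.dlp_profile and not matches_profile(policy.dlp_profile, event.content):
            continue
        return policy.action
    return "allow"

policies = [
    Policy("Block PII uploads by contractors", {"Cloud Storage"}, {"upload"},
           {"Contractors"}, "PII", "block"),
    Policy("Alert on PII uploads/downloads", {"Cloud Storage"}, {"upload", "download"},
           set(), "PII", "alert"),
]
```

Ordering the narrow "block" policy ahead of the broad "alert" policy is one common way to let specific rules take precedence; whether a real product evaluates policies top-down in this way is an assumption here.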

- Automated remediation workflows. Incident management lets you use policies to find and track DLP violations. When a violation is found, the object is placed in a forensics folder so you can review the specific types of sensitive data that were exposed. An automated cloud policy enforcement program is essential for reaching a quick resolution and remediation. In the cloud, this is done through workflows that kick off automatically and inform both the user and the security admins of what happened. For example, a file might be put into quarantine or legal hold because it contains sensitive information. Both the user who uploaded the file to the Cloud Storage app and the security admin are notified, and the violation is registered in the incident management dashboard, where the admin can assign a team member to investigate and resolve it. Setting up these workflows helps ensure that incidents are tracked and resolved to completion within a reasonable amount of time.
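
The quarantine, notify, register, assign, and resolve sequence described in the remediation workflow can be sketched as a small state machine. Everything here is hypothetical: `Violation`, `IncidentRecord`, and `Workflow` are illustrative names, and quarantine and notifications are modeled as in-memory lists standing in for whatever storage and messaging integrations a real deployment would use.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class Violation:
    file_name: str
    owner: str              # user who uploaded the file
    app: str
    data_types: list[str]   # sensitive data types the DLP profile found

@dataclass
class IncidentRecord:
    violation: Violation
    status: str = "open"            # open -> assigned -> resolved
    assignee: Optional[str] = None

@dataclass
class Workflow:
    security_admin: str
    quarantine: list = field(default_factory=list)      # stand-in for a quarantine folder
    notifications: list = field(default_factory=list)   # (recipient, message) pairs
    incidents: list = field(default_factory=list)       # stand-in for the incident dashboard

    def handle(self, v: Violation) -> IncidentRecord:
        # 1. Contain: move the file into quarantine.
        self.quarantine.append(v.file_name)
        # 2. Notify both the uploading user and the security admin.
        msg = f"{v.file_name} in {v.app} quarantined: contains {', '.join(v.data_types)}"
        self.notifications.append((v.owner, msg))
        self.notifications.append((self.security_admin, msg))
        # 3. Register an incident so it can be assigned and tracked to completion.
        record = IncidentRecord(v)
        self.incidents.append(record)
        return record

    def assign(self, record: IncidentRecord, analyst: str) -> None:
        record.assignee = analyst
        record.status = "assigned"

    def resolve(self, record: IncidentRecord) -> None:
        record.status = "resolved"

    def open_incidents(self) -> list:
        """Incidents not yet resolved, i.e. what still needs follow-up."""
        return [r for r in self.incidents if r.status != "resolved"]
```

A usage sketch: `wf = Workflow("secops@example.com")`, then `rec = wf.handle(Violation("payroll.xlsx", "alice@example.com", "example-storage", ["SSN"]))`, `wf.assign(rec, "bob")`, and `wf.resolve(rec)`, after which `wf.open_incidents()` is empty. Tracking status on each record is what lets an admin see at a glance which incidents are still outstanding.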

In conclusion, we hope these three requirements give you the insight you need to hit the ground running. For any organization implementing a cloud security program at scale, they can help drive that program to success.
